The predict function requires that the values of the independent variable at which the prediction or forecast is intended be supplied as a data frame. As I have pointed out before, the point estimate is the same for both prediction and forecast, but the interval estimates are very different.

Let us now use the predict function to replicate Figure 4, creating new values for income just for the purpose of plotting. The result is Figure 4. In our simple regression model (Table 4), the sum of squares due to regression includes only the variable income.
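The sketch below makes the sum-of-squares decomposition concrete. It uses plain Python, while the text itself works in R. It fits a simple regression by least squares and checks that the total sum of squares equals the sum of squares due to regression plus the sum of squares due to error. The income and food values are made up for illustration and are not the data behind Figure 4 or Table 4. The function name `anova_simple` is hypothetical.

```python
def anova_simple(x, y):
    """Return (SST, SSR, SSE) for the least-squares line of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b1 = sxy / sxx                # slope
    b0 = my - b1 * mx             # intercept
    fitted = [b0 + b1 * xi for xi in x]
    sst = sum((yi - my) ** 2 for yi in y)                   # total sum of squares
    ssr = sum((fi - my) ** 2 for fi in fitted)              # due to regression
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))  # due to error
    return sst, ssr, sse


# Illustrative numbers only (not the food expenditure data).
income = [3.7, 6.0, 10.5, 14.2, 19.8, 25.1]
food = [115.2, 135.9, 190.3, 205.4, 260.1, 300.7]
sst, ssr, sse = anova_simple(income, food)
print(abs(sst - (ssr + sse)) < 1e-6)  # True: SST = SSR + SSE
```

The identity holds because the model includes an intercept. With more predictors, the same SSR is split among the independent variables in an ANOVA table.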

In multiple regression models, which are models with more than one independent variable, the sum of squares due to regression includes the contributions of all the independent variables. The ANOVA results are shown in Table 4.

Non-linear functional forms of regression models are useful when the relationship between two variables seems to be more complex than a linear one.

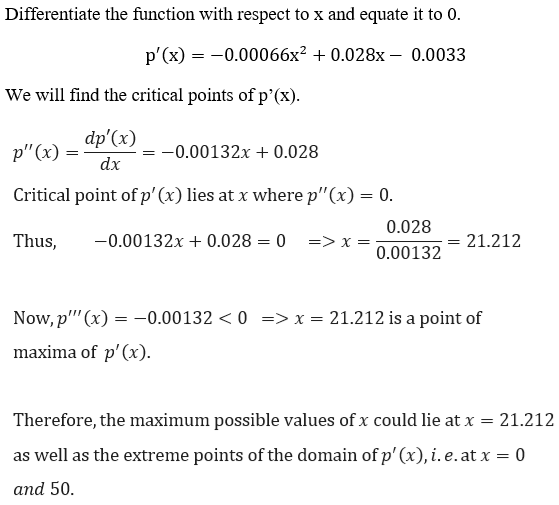

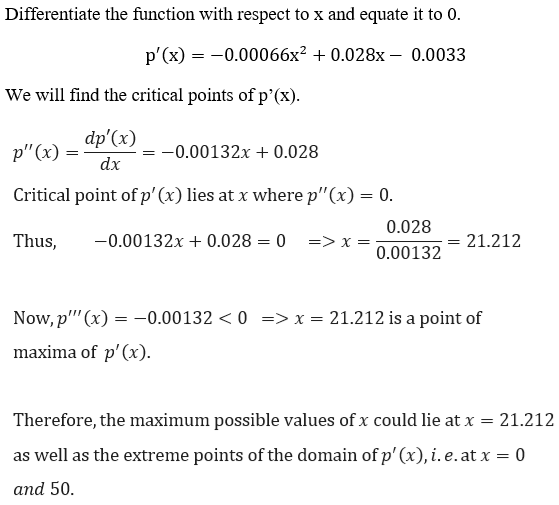

One can decide to use a non-linear functional form based on a mathematical model, reasoning, or simply inspecting a scatter plot of the data. In the food expenditure model, for example, it is reasonable to believe that the amount spent on food increases faster at lower incomes than at higher incomes.

Odds can be expressed in two ways: "odds of winning" (odds in favor) and "odds of losing" (odds against). For odds of winning, the first number is the chances for success and the second is the chances against success (of losing). For odds of losing, the order of these numbers is switched. From either form you can also calculate the percentage probability of winning and losing.

For example, if the odds of a football team losing are 1 to 5, there are five chances of them winning and only one of them losing. That means that if they played six times, they would win five times and lose once: the team would win 5 out of 6 games (about 83%) and lose 1 of them (about 17%).

To calculate probability given odds, you first need to know whether the odds are in favor of the event or against it. If the odds in favor are x to y, the probability of the event is $x/(x+y)$. If the odds against are x to y, the probability of the event is $y/(x+y)$.

To calculate odds given probability, first compute the probability that the event will not occur: if the probability of it occurring is p, then the probability of it not occurring is 1 - p. Then divide the probability by one minus the probability: odds $= p/(1-p)$.
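The conversions above fit in a few small functions. This minimal sketch uses Python's `fractions` module so the results stay exact. The function names are illustrative and do not come from any particular calculator.

```python
from fractions import Fraction


def prob_from_odds_in_favor(x, y):
    """Odds of x to y in favor of an event -> probability x / (x + y)."""
    return Fraction(x, x + y)


def prob_from_odds_against(x, y):
    """Odds of x to y against an event -> probability y / (x + y)."""
    return Fraction(y, x + y)


def odds_from_prob(p):
    """Probability p -> odds in favor, p / (1 - p); undefined when p == 1."""
    p = Fraction(p)
    return p / (1 - p)


# Odds of the team losing are 1 to 5, i.e. 1 to 5 in favor of the event "lose".
p_lose = prob_from_odds_in_favor(1, 5)
p_win = 1 - p_lose
print(p_lose, p_win, odds_from_prob(p_win))  # 1/6 5/6 5
```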

Odds Calculator created by Bogna Szyk and Steven Wooding; reviewed by Dominik Czernia, PhD, and Jack Bowater.


Here's the formula for calculating the forecast error percent:

$$\text{Forecast error percent} = \frac{|\text{actual} - \text{forecast}|}{\text{actual}} \times 100$$

In this section, we are concerned with the prediction interval for a new response, $y_{new}$, when the predictor's value is $x_h$.

The Regression Equation. Simple linear regression estimates exactly how much Y will change when X changes by a certain amount.
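Here is a direct transcription of the forecast error percent formula. It is a sketch that assumes the actual value is positive. The formula divides by the actual value, so it is undefined at zero and changes sign for negative actuals.

```python
def forecast_error_percent(actual, forecast):
    """|actual - forecast| / actual * 100, following the forecast error percent formula."""
    if actual == 0:
        raise ValueError("forecast error percent is undefined when actual is 0")
    return abs(actual - forecast) * 100 / actual


print(forecast_error_percent(200, 180))  # 10.0
print(forecast_error_percent(200, 230))  # 15.0
```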


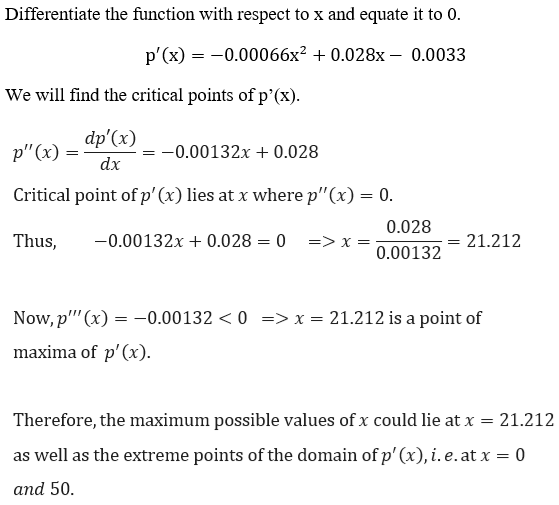

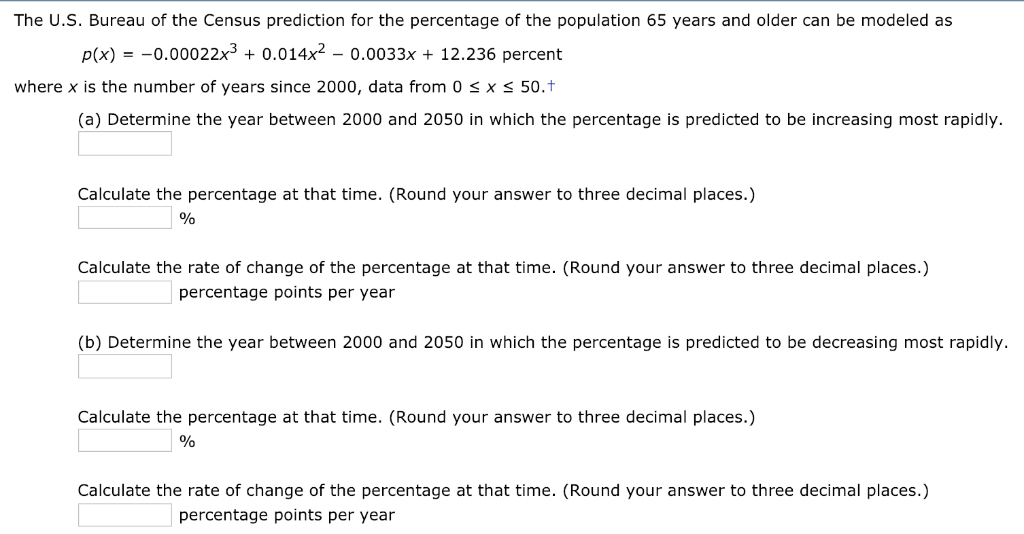

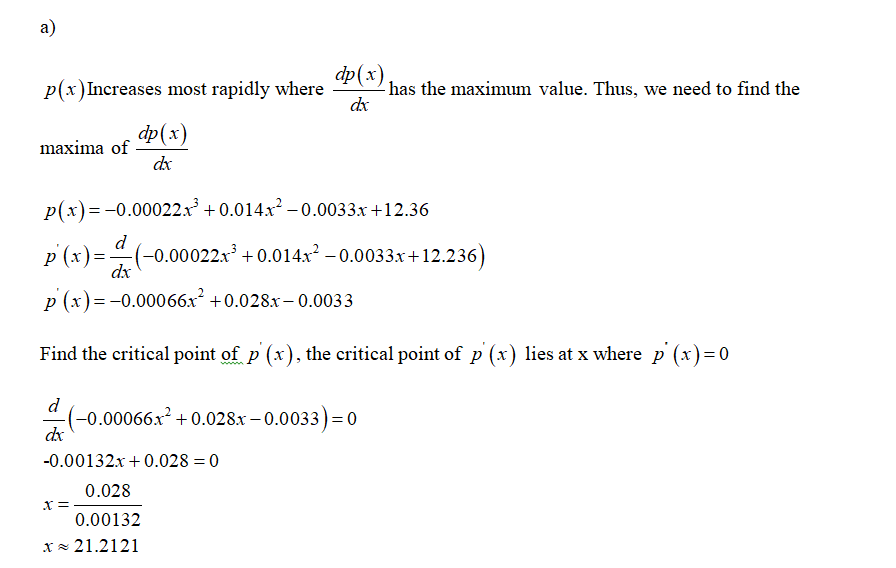

In practice, for linear regression, the difference between RMSE and RSE is very small, particularly for big data applications. In regression, an outlier is a record whose actual y value is distant from the predicted value.

A prediction interval [ℓ, u] for a future observation X from a normal distribution N(µ, σ²) with known mean and variance may be calculated from

$$\gamma = P(\ell < X < u) = P\left(\frac{\ell - \mu}{\sigma} < Z < \frac{u - \mu}{\sigma}\right),$$

where Z is a standard normal variable. Taking $\ell = \mu - z\sigma$ and $u = \mu + z\sigma$, where z is the $(1+\gamma)/2$ quantile of the standard normal distribution, gives an interval with probability γ.
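For a normal distribution with known mean and variance, the standard library's `statistics.NormalDist` can compute this interval. The sketch assumes µ and σ really are known. When they are estimated from data, you need a t-based interval that also accounts for estimation error.

```python
from statistics import NormalDist


def normal_prediction_interval(mu, sigma, gamma=0.95):
    """Return (l, u) with P(l < X < u) = gamma for X ~ N(mu, sigma**2), mu and sigma known."""
    z = NormalDist().inv_cdf((1 + gamma) / 2)  # (1 + gamma)/2 quantile of the standard normal
    return mu - z * sigma, mu + z * sigma


lo, hi = normal_prediction_interval(mu=100, sigma=15, gamma=0.95)
print(round(lo, 1), round(hi, 1))  # 70.6 129.4
```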

The smaller the standard error of the fit, the more precise the predicted mean response. Figure illustrates the residuals from the regression line fit to the lung data. With the advent of big data, regression is widely used to form a model to predict individual outcomes for new data, rather than to explain data in hand. Location and house size appear to have a strong interaction.

If a prediction interval extends outside of acceptable boundaries, the predictions might not be sufficiently precise for your requirements. For purposes of fitting a regression that reliably predicts future data, identifying influential observations is only useful in smaller data sets. Heteroskedasticity is the lack of constant residual variance across the range of the predicted values.

A prediction interval will contain a future observation a specified percentage of the time. Prediction intervals are stated with a certain level of confidence, which is the percentage of future data points that should be included within the range.

The X variable is known as the predictor or independent variable. Statisticians like to distinguish between main effects, or independent variables, and the interactions between the main effects.

The regression equation for the linear model takes the following form: $Y = b_0 + b_1 x_1$. In the regression equation, Y is the response variable, $b_0$ is the intercept (constant), $b_1$ is the regression coefficient (slope), and $x_1$ is the predictor variable.

For the regression to be fully valid, the residuals are assumed to be normally distributed, have the same variance, and be independent. A regression model that fits the data well is set up such that changes in X lead to changes in Y. An influential value in regression need not be associated with a large residual.

Take, for example, an acceptance criterion that only requires a physical property of a material to meet or exceed a minimum value with no upper limit to the value of the physical property. That is, if someone wanted to know the skin cancer mortality rate for a location at 40 degrees north, our best guess would be somewhere between and deaths per 10 million.

The value of the response variable for given values of the factors is predicted using the prediction equation. A prediction is an estimate of the value of y for a given value of x, based on a regression model of the form shown in Equation 1. predict uses the stored parameter estimates from the model, obtains the corresponding values of x for each observation in the data, and then combines them to produce the prediction.

When analysts and researchers use the term regression by itself, they are typically referring to linear regression; the focus is usually on developing a linear model to explain the relationship between predictor variables and a numeric outcome variable. In such cases, the focus is not on predicting individual cases, but rather on understanding the overall relationship. Also, if the relationship is strongly linear, a normal probability plot of the residuals should yield a P-value much greater than the chosen significance level.

True positive rate: for actual YES cases, how often did we predict YES?


The summary function in R computes RSE as well as other metrics for a regression model. Another useful metric that you will see in software output is the coefficient of determination, also called the R-squared statistic or R².

R-squared ranges from 0 to 1 and measures the proportion of variation in the data that is accounted for in the model. It is useful mainly in explanatory uses of regression where you want to assess how well the model fits the data.

The formula for R² is:

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$$

The denominator is proportional to the variance of Y. The output from R also reports an adjusted R-squared, which adjusts for the degrees of freedom; in multiple regression this is seldom significantly different from R². Along with the estimated coefficients, R reports the standard error of the coefficients (SE) and a t-statistic:

$$t_b = \frac{\hat{b}}{\mathrm{SE}(\hat{b})}$$
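For a simple regression with one predictor, these quantities can be computed by hand. This Python sketch is an illustration, not R's summary implementation. It returns:

- R²
- adjusted R² = 1 − (1 − R²)(n − 1)/(n − P − 1)
- RMSE, which divides the residual sum of squares by n
- RSE, which divides it by the residual degrees of freedom n − P − 1
- the slope's t-statistic

The four-point data set is made up.

```python
import math


def regression_summary(x, y):
    """R^2, adjusted R^2, RMSE, RSE and the slope's t-statistic for y = b0 + b1 * x."""
    n, p = len(x), 1                        # p = number of predictors
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    b1 = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    b0 = my - b1 * mx
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))  # residual sum of squares
    sst = sum((yi - my) ** 2 for yi in y)                          # proportional to var(Y)
    r2 = 1 - sse / sst
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - p - 1)
    rmse = math.sqrt(sse / n)
    rse = math.sqrt(sse / (n - p - 1))      # uses residual degrees of freedom
    se_b1 = rse / math.sqrt(sxx)            # standard error of the slope
    return {"b0": b0, "b1": b1, "r2": r2, "adj_r2": adj_r2,
            "rmse": rmse, "rse": rse, "t_b1": b1 / se_b1}


stats = regression_summary([1, 2, 3, 4], [1, 3, 2, 4])
print(round(stats["r2"], 2), round(stats["adj_r2"], 2))  # 0.64 0.46
```

With n much larger than P, the factors n and n − P − 1 are nearly equal. That is why RMSE and RSE barely differ on large data sets.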

The higher the t-statistic and the lower the p-value , the more significant the predictor. Data scientists do not generally get too involved with the interpretation of these statistics, nor with the issue of statistical significance. Data scientists primarily focus on the t-statistic as a useful guide for whether to include a predictor in a model or not.

High t-statistics (which go with p-values near 0) indicate a predictor should be retained in a model, while very low t-statistics indicate a predictor could be dropped.

Intuitively, you can see that it would make a lot of sense to set aside some of the original data, not use it to fit the model, and then apply the model to the set-aside holdout data to see how well it does.

Normally, you would use a majority of the data to fit the model, and use a smaller portion to test the model. Using a holdout sample, though, leaves you subject to some uncertainty that arises simply from variability in the small holdout sample.

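How different would the assessment be if you selected a different holdout sample? Cross-validation extends the idea of a holdout sample to multiple sequential holdout samples. The algorithm for basic k-fold cross-validation is as follows:

1. Set aside 1/k of the data as a holdout sample.
2. Train the model on the remaining data.
3. Apply (score) the model to the 1/k holdout, and record the needed model assessment metrics.
4. Restore the first 1/k of the data, and set aside the next 1/k (excluding any records that were picked the first time).
5. Repeat steps 2 and 3.
6. Repeat until each record has been used in the holdout portion.
7. Average or otherwise combine the model assessment metrics.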
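Below is a minimal sketch of basic k-fold cross-validation for a one-predictor least-squares line, scoring each holdout fold by RMSE. The records are shuffled once and then dealt into k disjoint folds. This is one common way to make sure every record is held out exactly once. The seed and the fold assignment are arbitrary choices, and the helper names are hypothetical.

```python
import math
import random


def fit_line(x, y):
    """Ordinary least squares for y = b0 + b1 * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    b1 = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return my - b1 * mx, b1


def kfold_rmse(x, y, k=5, seed=1):
    """Average holdout RMSE over k folds (records shuffled once, then split)."""
    idx = list(range(len(x)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]           # k disjoint holdout sets covering every record
    scores = []
    for holdout in folds:
        held = set(holdout)
        train = [i for i in idx if i not in held]   # fit on the remaining data
        b0, b1 = fit_line([x[i] for i in train], [y[i] for i in train])
        sse = sum((y[i] - (b0 + b1 * x[i])) ** 2 for i in holdout)
        scores.append(math.sqrt(sse / len(holdout)))  # score the holdout fold
    return sum(scores) / k                           # combine the fold metrics


x = list(range(20))
y = [3 * xi + 2 + (1 if xi % 2 else -1) for xi in x]  # illustrative noisy line
print(kfold_rmse(x, y, k=5))
```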

The division of the data into the training sample and the holdout sample is also called a fold. In some problems, many variables could be used as predictors in a regression. For example, to predict house value, additional variables such as the basement size or year built could be used.

In R, these are easy to add to the regression equation. Adding more variables, however, does not necessarily mean we have a better model.

Including additional variables always reduces RMSE and increases R² on the data used to fit the model. Hence, these metrics are not appropriate to help guide the model choice. In the case of regression, AIC has the form:

$$\mathrm{AIC} = 2P + n \log(\mathrm{RSS}/n)$$

where P is the number of variables and n is the number of records. The goal is to find the model that minimizes AIC; models with k extra variables are penalized by 2k. The formula for AIC may seem a bit mysterious, but in fact it is based on asymptotic results in information theory.

There are several variants of AIC. BIC, or Bayesian information criterion, is similar to AIC but with a stronger penalty for including additional variables in the model. Mallows Cp is a variant of AIC developed by Colin Mallows. Data scientists generally do not need to worry about the differences among these in-sample metrics or the underlying theory behind them.
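Here is a sketch of AIC in the form 2P + n log(RSS/n), with BIC written the same way but with a penalty of P log(n). Software such as R may add constant terms or count parameters (for example the intercept) differently. Absolute values can therefore differ, and only comparisons between models fit to the same data are meaningful. The RSS figures are hypothetical.

```python
import math


def aic(rss, n, p):
    """AIC in the form 2P + n * log(RSS / n); P = number of variables, n = records."""
    return 2 * p + n * math.log(rss / n)


def bic(rss, n, p):
    """BIC swaps the 2P penalty for P * log(n), which is larger once n >= 8."""
    return p * math.log(n) + n * math.log(rss / n)


# Hypothetical fits on n = 100 records: adding 2 variables lowers RSS from 520 to 505.
n = 100
small, large = aic(520.0, n, 3), aic(505.0, n, 5)
print(small < large)  # True: the RSS drop does not pay for the extra 2 * 2 = 4 penalty
```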

How do we find the model that minimizes AIC? One approach is to search through all possible models, called all subset regression. This is computationally expensive and is not feasible for problems with large data and many variables.

An attractive alternative is to use stepwise regression, which successively adds and drops predictors to find a model that lowers AIC. The MASS package by Venables and Ripley offers a stepwise regression function called stepAIC.

Simpler yet are forward selection and backward selection. In forward selection, you start with no predictors and add them one by one, at each step adding the predictor that has the largest contribution to R², stopping when the contribution is no longer statistically significant.

In backward selection, or backward elimination , you start with the full model and take away predictors that are not statistically significant until you are left with a model in which all predictors are statistically significant.

Penalized regression is similar in spirit to AIC. Instead of explicitly searching through a discrete set of models, the model-fitting equation incorporates a constraint that penalizes the model for too many variables (parameters). Rather than eliminating predictor variables entirely, as with stepwise, forward, and backward selection, penalized regression applies the penalty by reducing coefficients, in some cases to near zero.
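To show how a penalty shrinks a coefficient instead of removing the variable, here is ridge regression (one common penalized method) for a single predictor with an unpenalized intercept. Minimizing the residual sum of squares plus λ·b1² gives the closed form b1 = Sxy / (Sxx + λ). In practice ridge is applied to several standardized predictors at once. The data and λ values here are illustrative only.

```python
def ridge_slope(x, y, lam):
    """Ridge fit for one predictor: minimize sum((y - b0 - b1*x)**2) + lam * b1**2.

    The intercept is not penalized, so b1 = Sxy / (Sxx + lam) and b0 = mean(y) - b1 * mean(x).
    lam = 0 gives ordinary least squares.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b1 = sxy / (sxx + lam)
    return my - b1 * mx, b1


x, y = [1, 2, 3, 4], [1, 3, 2, 4]
for lam in (0, 5, 50):
    print(lam, ridge_slope(x, y, lam)[1])  # slope shrinks toward 0: 0.8, 0.4, about 0.073
```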

Common penalized regression methods are ridge regression and lasso regression. Stepwise regression and all subset regression are in-sample methods to assess and tune models. This means the model selection is possibly subject to overfitting and may not perform as well when applied to new data.

One common approach to avoid this is to use cross-validation to validate the models. In linear regression, overfitting is typically not a major issue, due to the simple linear global structure imposed on the data.

Weighted regression is used by statisticians for a variety of purposes; in particular, it is important for analysis of complex surveys.

Data scientists may find weighted regression useful in two cases:

- Inverse-variance weighting when different observations have been measured with different precision.
- Analysis of data in an aggregated form such that the weight variable encodes how many original observations each row in the aggregated data represents.

For example, with the housing data, older sales are less reliable than more recent sales. Using the DocumentDate to determine the year of the sale, we can compute a Weight as the number of years since the beginning of the data.
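Below is a minimal sketch of weighted least squares for one predictor, with weights computed as years since the start of the data. The sales records and the start year (2005) are hypothetical. In R this fit would come from lm with a weights argument, while this version solves the weighted normal equations directly.

```python
def weighted_fit_line(x, y, w):
    """Weighted least squares for y = b0 + b1 * x.

    Minimizes sum(w_i * (y_i - b0 - b1 * x_i) ** 2); records with larger
    weights pull the line harder, and a weight of 0 drops a record entirely.
    """
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw   # weighted mean of x
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw   # weighted mean of y
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    b1 = sxy / sxx
    return my - b1 * mx, b1


# Hypothetical sales: living area (sq ft), price, and year of sale.
sqft = [1200, 1500, 1800, 2100, 2400]
price = [250_000, 300_000, 340_000, 400_000, 445_000]
year = [2006, 2008, 2010, 2012, 2014]
start_year = 2005                               # assumed beginning of the data
weight = [yr - start_year for yr in year]       # recent sales count more
print(weighted_fit_line(sqft, price, weight))
```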

We can compute a weighted regression with the lm function using the weights argument. An excellent treatment of cross-validation and resampling can be found in An Introduction to Statistical Learning by Gareth James et al. (Springer).

The primary purpose of regression in data science is prediction. This is useful to keep in mind, since regression, being an old and established statistical method, comes with baggage that is more relevant to its traditional explanatory modeling role than to prediction.

Regression models should not be used to extrapolate beyond the range of the data. A model fit to the house sales data, for instance, cannot be trusted to predict the price of vacant land. Why? The data contain only parcels with buildings; there are no records corresponding to vacant land. Consequently, the model has no information to tell it how to predict the sales price for vacant land.

Much of statistics involves understanding and measuring variability (uncertainty). More useful than the t-statistics and p-values reported in regression output are confidence intervals, which are uncertainty intervals placed around regression coefficients and predictions.

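The most common regression confidence intervals encountered in software output are those for regression parameters (coefficients). Here is a bootstrap algorithm for generating confidence intervals for regression parameters (coefficients) for a data set with P predictors and n records (rows):

1. Consider each row (including the outcome variable) as a single "ticket" and place all n tickets in a box.
2. Draw a ticket at random, record the values, and replace it in the box.
3. Repeat step 2 n times; you now have one bootstrap resample.
4. Fit a regression to the bootstrap sample, and record the estimated coefficients.
5. Repeat steps 2 through 4 many times.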

You now have 1, bootstrap values for each coefficient; find the appropriate percentiles for each one (e.g., the 5th and 95th percentiles for a 90% confidence interval). You can use the Boot function in R (from the car package) to generate actual bootstrap confidence intervals for the coefficients, or you can simply use the formula-based intervals that are a routine R output.
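The bootstrap algorithm for coefficient intervals can be sketched in plain Python for a single predictor. Rows are resampled with replacement, a line is refit to each resample, and percentiles of the recorded coefficients form the interval. This illustrates those steps under the stated assumptions and does not reproduce the car package's Boot function. The resample count, level, and seed are arbitrary.

```python
import random


def fit_line(x, y):
    """Ordinary least squares for y = b0 + b1 * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    b1 = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return my - b1 * mx, b1


def bootstrap_coef_ci(x, y, n_boot=1000, level=0.90, seed=0):
    """Percentile bootstrap intervals for intercept and slope (resampling rows)."""
    rng = random.Random(seed)
    n = len(x)
    b0s, b1s = [], []
    for _ in range(n_boot):
        rows = [rng.randrange(n) for _ in range(n)]  # n draws with replacement
        xs = [x[i] for i in rows]
        if len(set(xs)) < 2:  # slope undefined if every draw has the same x; skip this draw
            continue
        b0, b1 = fit_line(xs, [y[i] for i in rows])
        b0s.append(b0)
        b1s.append(b1)

    def percentile_interval(values):
        values = sorted(values)
        last = len(values) - 1
        return values[int((1 - level) / 2 * last)], values[int((1 + level) / 2 * last)]

    return percentile_interval(b0s), percentile_interval(b1s)


x = list(range(10))
y = [2 * xi + 1 + (1 if xi % 2 else -1) for xi in x]  # illustrative data
print(bootstrap_coef_ci(x, y))
```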

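The conceptual meaning and interpretation are the same, and not of central importance to data scientists, because they concern the regression coefficients. Of greater interest to data scientists are intervals around predicted values. The uncertainty around a predicted value comes from two sources: uncertainty about what the relevant predictor variables and their coefficients are (see the preceding bootstrap algorithm), and additional error inherent in individual data points.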

The individual data point error can be thought of as follows: even if we knew for certain what the regression equation was, the actual outcome values for a given set of predictor values would still vary. For example, several houses, each with 8 rooms, a 6, square foot lot, 3 bathrooms, and a basement, might have different values.

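We can model this individual error with the residuals from the fitted values. The bootstrap algorithm for modeling both the regression model error and the individual data point error would look as follows:

1. Take a bootstrap sample from the data.
2. Fit the regression, and predict the new value.
3. Take a single residual at random from the original regression fit, add it to the predicted value, and record the result.
4. Repeat steps 1 through 3 many times.
5. Find the 2.5th and the 97.5th percentiles of the results; these bound a 95% prediction interval.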
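Here is a plain-Python sketch of that procedure for a single predictor. Each simulated value combines a refit on a bootstrap sample, which captures model error, with one randomly drawn residual from the original fit, which captures individual data point error. The 95% level, resample count, and seed are illustrative choices, and the function names are hypothetical.

```python
import random


def fit_line(x, y):
    """Ordinary least squares for y = b0 + b1 * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    b1 = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return my - b1 * mx, b1


def bootstrap_prediction_interval(x, y, x_new, n_boot=1000, seed=0):
    """95% bootstrap prediction interval for a single new record at x_new."""
    rng = random.Random(seed)
    n = len(x)
    b0, b1 = fit_line(x, y)
    residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]  # from the original fit
    sims = []
    for _ in range(n_boot):
        rows = [rng.randrange(n) for _ in range(n)]           # bootstrap sample
        xs = [x[i] for i in rows]
        if len(set(xs)) < 2:                                   # slope undefined; skip this draw
            continue
        c0, c1 = fit_line(xs, [y[i] for i in rows])            # refit and predict
        sims.append(c0 + c1 * x_new + rng.choice(residuals))   # add one random residual
    sims.sort()
    last = len(sims) - 1
    return sims[int(0.025 * last)], sims[int(0.975 * last)]    # 2.5th and 97.5th percentiles


x = list(range(10))
y = [2 * xi + 1 + (1 if xi % 2 else -1) for xi in x]  # illustrative data
print(bootstrap_prediction_interval(x, y, x_new=5))
```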

A prediction interval pertains to uncertainty around a single value, while a confidence interval pertains to a mean or other statistic calculated from multiple values.

Thus, a prediction interval will typically be much wider than a confidence interval for the same value. We model this individual value error in the bootstrap model by selecting an individual residual to tack on to the predicted value.
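For simple linear regression, both intervals also have closed forms at a predictor value x_h. The confidence interval for the mean response is ŷ_h ± t·s·√(1/n + (x_h − x̄)²/Sxx). The prediction interval for a new response adds 1 under the square root to account for the individual data point error. In this sketch the t critical value is passed in by hand, because the Python standard library has no t quantile function. The value 4.303 is approximately the 97.5th percentile of t with 2 degrees of freedom, and the data are made up.

```python
import math


def mean_and_prediction_intervals(x, y, x_h, t_crit):
    """Confidence interval for the mean response and prediction interval for a new
    response at x = x_h, for simple linear regression with n - 2 residual df."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    b1 = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    b0 = my - b1 * mx
    s = math.sqrt(sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y)) / (n - 2))
    y_hat = b0 + b1 * x_h
    leverage_term = 1 / n + (x_h - mx) ** 2 / sxx
    se_mean = s * math.sqrt(leverage_term)      # uncertainty in the fitted line only
    se_new = s * math.sqrt(1 + leverage_term)   # adds the individual data point error
    ci = (y_hat - t_crit * se_mean, y_hat + t_crit * se_mean)
    pi = (y_hat - t_crit * se_new, y_hat + t_crit * se_new)
    return ci, pi


ci, pi = mean_and_prediction_intervals([1, 2, 3, 4], [1, 3, 2, 4], x_h=2.5, t_crit=4.303)
print(ci, pi)  # same center; the prediction interval is much wider
```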

Which should you use? That depends on the context and the purpose of the analysis, but, in general, data scientists are interested in specific individual predictions, so a prediction interval would be more appropriate.

Using a confidence interval when you should be using a prediction interval will greatly underestimate the uncertainty in a given predicted value. Factor variables, also termed categorical variables, take on a limited number of discrete values.

Regression requires numerical inputs, so factor variables need to be recoded to use in the model. The most common approach is to convert a variable into a set of binary dummy variables. In the King County housing data, there is a factor variable for the property type; a small subset of six records is shown below.

There are three possible values: Multiplex , Single Family , and Townhouse. To use this factor variable, we need to convert it to a set of binary variables. We do this by creating a binary variable for each possible value of the factor variable.

To do this in R, we use the model.matrix function. The function model.matrix converts a data frame into a matrix suitable for a linear model. The factor variable PropertyType, which has three distinct levels, is represented as a matrix with three columns.

In the regression setting, a factor variable with P distinct levels is usually represented by a matrix with only P − 1 columns. This is because a regression model typically includes an intercept term. With an intercept, once you have defined the values for P − 1 binaries, the value for the Pth is known and could be considered redundant.
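Here is a small sketch of reference coding in plain Python. Each level except the reference becomes a 0/1 column, so a factor with P levels yields P − 1 columns. Choosing the first level in sorted order as the reference mimics R's default for these labels. The six records are made up rather than taken from the King County table, and the function name is hypothetical.

```python
def reference_code(values, reference=None):
    """Reference (dummy) coding: one 0/1 column per level except the reference level.

    By default the first level in sorted order is the reference.
    """
    levels = sorted(set(values))
    if reference is None:
        reference = levels[0]
    columns = [lv for lv in levels if lv != reference]
    rows = [[1 if v == lv else 0 for lv in columns] for v in values]
    return columns, rows


# Hypothetical records, not the King County subset from the text.
property_type = ["Multiplex", "Single Family", "Single Family",
                 "Townhouse", "Single Family", "Townhouse"]
cols, X = reference_code(property_type)
print(cols)  # ['Single Family', 'Townhouse']
print(X[0])  # [0, 0] -> a Multiplex record is the reference (all zeros)
```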

The default representation in R is to use the first factor level as a reference and interpret the remaining levels relative to that level. The output from the R regression shows two coefficients corresponding to PropertyType: PropertyTypeSingle Family and PropertyTypeTownhouse.

There are several different ways to encode factor variables, known as contrast coding systems. For example, deviation coding, also known as sum contrasts, compares each level against the overall mean.

With the exception of ordered factors, data scientists will generally not encounter any type of coding besides reference coding or one-hot encoding. Some factor variables can produce a huge number of binary dummies; zip codes are a factor variable, and there are 43, zip codes in the US.

In such cases, it is useful to explore the data, and the relationships between predictor variables and the outcome, to determine whether useful information is contained in the categories. If so, you must further decide whether it is useful to retain all factors, or whether the levels should be consolidated.

ZipCode is an important variable, since it is a proxy for the effect of location on the value of a house. Including all levels requires 81 coefficients corresponding to 81 degrees of freedom. Moreover, several zip codes have only one sale.

In some problems, you can consolidate a zip code using the first two or three digits, corresponding to a submetropolitan geographic region. An alternative approach is to group the zip codes according to another variable, such as sale price.
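Grouping zip codes by the median of a per-sale value can be sketched in Python. The same hypothetical helper works whether the values are sale prices or residuals from an initial model. The zip codes and residuals are made up.

The ntile helper ranks values into n roughly equal-sized groups numbered 1 (lowest) to n (highest), in the spirit of dplyr's ntile. How leftover rows are assigned may differ from dplyr's implementation.

```python
from collections import defaultdict
from statistics import median

def ntile(values, n):
    """Assign group numbers 1..n by rank, using floor(n * (rank - 1) / len) + 1.
    Ties are broken by position because sorted() is stable."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    groups = [0] * len(values)
    for rank0, i in enumerate(order):
        groups[i] = n * rank0 // len(values) + 1
    return groups

def zip_groups(zip_codes, per_sale_values, n_groups=5):
    """Group zip codes by the median of a per-sale value (sale price, or a residual
    from an initial model), from lowest median (1) to highest (n_groups)."""
    by_zip = defaultdict(list)
    for z, v in zip(zip_codes, per_sale_values):
        by_zip[z].append(v)
    codes = sorted(by_zip)
    medians = [median(by_zip[z]) for z in codes]
    return dict(zip(codes, ntile(medians, n_groups)))

# Hypothetical zip codes and residuals (in $1,000s), one or two sales per zip code.
zips = ["Z1", "Z1", "Z2", "Z3", "Z3", "Z4", "Z5", "Z6", "Z6"]
resid = [-90, -70, -40, -10, 10, 120, 60, 200, 180]
print(zip_groups(zips, resid))
```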

Even better is to form zip code groups using the residuals from an initial model. The median residual is computed for each zip code, and the ntile function (from the dplyr package) is used to split the zip codes, sorted by the median, into five groups.

Some factor variables have levels with a natural order; these are termed ordered factor variables or ordered categorical variables.

For example, the loan grade could be A, B, C, and so on; each grade carries more risk than the prior grade. Ordered factor variables can typically be converted to numerical values and used as is.
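A minimal Python sketch of that conversion follows. It assumes a hypothetical A to G loan grade scale, with A the least risky. Mapping each grade to its position keeps the ordering. It also implicitly treats adjacent grades as equally spaced, which is the usual trade-off of using an ordered factor as a number.

```python
# Hypothetical grade scale, least risky first.
LOAN_GRADES = ["A", "B", "C", "D", "E", "F", "G"]

def encode_ordered(values, ordered_levels):
    """Map each level to its 1-based position (A -> 1, B -> 2, ...).
    A set of dummy variables would discard this ordering."""
    position = {level: i + 1 for i, level in enumerate(ordered_levels)}
    return [position[v] for v in values]

print(encode_ordered(["C", "A", "B", "C"], LOAN_GRADES))
```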

For example, the variable BldgGrade is an ordered factor variable. Several of the grade types are shown in the accompanying table. While the grades have specific meanings, the numeric values are ordered from low to high, with higher values corresponding to higher-grade homes.

Treating ordered factors as a numeric variable preserves the information contained in the ordering, which would be lost if the variable were converted to an unordered factor.

In data science, the most important use of regression is to predict some dependent outcome variable. In some cases, however, gaining insight from the equation itself to understand the nature of the relationship between the predictors and the outcome can be of value.

This section provides guidance on examining the regression equation and interpreting it. In multiple regression, the predictor variables are often correlated with each other.

The coefficient for Bedrooms is negative! This implies that adding a bedroom to a house will reduce its value. How can this be? This is because the predictor variables are correlated: larger houses tend to have more bedrooms, and it is the size that drives house value, not the number of bedrooms.

Consider two homes of the exact same size: it is reasonable to expect that a home with more, but smaller, bedrooms would be considered less desirable. Having correlated predictors can make it difficult to interpret the sign and value of regression coefficients and can inflate the standard error of the estimates.

The variables for bedrooms, house size, and number of bathrooms are all correlated. This is illustrated by the following example, which fits another regression removing the variables SqFtTotLiving, SqFtFinBasement, and Bathrooms from the equation.

The update function can be used to add or remove variables from a model. Now the coefficient for bedrooms is positive, in line with what we would expect (though it is really acting as a proxy for house size, now that those variables have been removed). Correlated variables are only one issue with interpreting regression coefficients.
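The sign flip can be reproduced with a small Python sketch on hypothetical data, not the King County data. Prices are generated so that, at a fixed size, each extra bedroom lowers the price, while bedrooms rise with size. The ols helper is a plain normal-equations solver written only for this illustration; in R, lm and update do this work.

```python
def ols(X, y):
    """Least squares coefficients from the normal equations (X'X) b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    aug = [xtx[i] + [xty[i]] for i in range(p)]  # augmented matrix [X'X | X'y]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * c for a, c in zip(aug[r], aug[col])]
    return [aug[i][p] for i in range(p)]

# Hypothetical homes: +$200 per square foot and, at a fixed size, -$10,000 per
# extra bedroom. Bedrooms tend to rise with size, so the two are correlated.
sizes = [1000, 1500, 2000, 2500, 3000, 1200, 1800, 2600]
beds = [2, 3, 3, 4, 5, 2, 3, 4]
price = [50000 + 200 * s - 10000 * b for s, b in zip(sizes, beds)]

with_size = ols([[1, s, b] for s, b in zip(sizes, beds)], price)
beds_only = ols([[1, b] for b in beds], price)

print("Bedrooms coefficient, size in model:", round(with_size[2], 1))
print("Bedrooms coefficient, size removed: ", round(beds_only[1], 1))
```

With size in the model, the bedrooms coefficient recovers the negative per-bedroom effect. With size removed, bedrooms absorbs the size effect and its coefficient turns positive.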

An extreme case of correlated variables produces multicollinearity: a condition in which there is redundancy among the predictor variables. Perfect multicollinearity occurs when one predictor variable can be expressed as a linear combination of others.

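Multicollinearity occurs, for example, when a variable is included multiple times by mistake, when P dummies instead of P - 1 are created from a factor variable, or when two variables are nearly perfectly correlated with one another. Multicollinearity in regression must be addressed: variables should be removed until the multicollinearity is gone.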

A regression does not have a well-defined solution in the presence of perfect multicollinearity. Many software packages, including R, automatically handle certain types of multicollinearity; R's lm, for example, drops a perfectly redundant term and reports its coefficient as NA. In the case of nonperfect multicollinearity, the software may obtain a solution, but the results may be unstable.
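A quick Python check shows why perfect multicollinearity has no well-defined solution. It uses exact fractions so that rounding cannot hide a zero, and assumes six hypothetical PropertyType values. With an intercept and all P = 3 dummies, the dummy columns add up to the intercept column, so X'X is singular (determinant 0). Dropping one dummy restores a unique solution. The helper names are illustrative.

```python
from fractions import Fraction

def gram(X):
    """X'X for a design matrix given as a list of rows."""
    p = len(X[0])
    return [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]

def determinant(M):
    """Exact determinant via Gaussian elimination over fractions (no rounding error)."""
    A = [[Fraction(v) for v in row] for row in M]
    n = len(A)
    det = Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if A[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)  # no usable pivot in this column: the matrix is singular
        if pivot != c:
            A[c], A[pivot] = A[pivot], A[c]
            det = -det  # a row swap flips the sign of the determinant
        det *= A[c][c]
        for r in range(c + 1, n):
            factor = A[r][c] / A[c][c]
            A[r] = [a - factor * b for a, b in zip(A[r], A[c])]
    return det

property_type = ["Multiplex", "Single Family", "Single Family",
                 "Townhouse", "Single Family", "Townhouse"]  # hypothetical
levels = ["Multiplex", "Single Family", "Townhouse"]

# Intercept plus all P = 3 dummies: the dummies sum to the intercept column.
X_all = [[1] + [int(v == lev) for lev in levels] for v in property_type]
# Intercept plus P - 1 = 2 dummies (Multiplex as the reference level).
X_ref = [[1] + [int(v == lev) for lev in levels[1:]] for v in property_type]

print("det(X'X) with P dummies:    ", determinant(gram(X_all)))
print("det(X'X) with P - 1 dummies:", determinant(gram(X_ref)))
```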

Multicollinearity is not such a problem for nonregression methods like trees, clustering, and nearest-neighbors, and in such methods it may be advisable to retain P dummies instead of P - 1.

That said, even in those methods, nonredundancy in predictor variables is still a virtue. With correlated variables, the problem is one of commission: including different variables that have a similar predictive relationship with the response. With confounding variables, the problem is one of omission: an important variable is not included in the regression equation.

Naive interpretation of the equation coefficients can lead to invalid conclusions. The regression coefficients of SqFtLot, Bathrooms, and Bedrooms are all negative. The original regression model does not contain a variable to represent location, a very important predictor of house price.

To model location, include a variable ZipGroup that categorizes the zip code into one of five groups, from least expensive (1) to most expensive (5).

The coefficient for Bedrooms is still negative. While this is unintuitive, this is a well-known phenomenon in real estate. For homes of the same livable area and number of bathrooms, having more, and therefore smaller, bedrooms is associated with less valuable homes.

Statisticians like to distinguish between main effects, or independent variables, and the interactions between the main effects. Main effects are what are often referred to as the predictor variables in the regression equation.

An implicit assumption when only main effects are used in a model is that the relationship between a predictor variable and the response is independent of the other predictor variables. This is often not the case. Location in real estate is everything, and it is natural to presume that the relationship between, say, house size and the sale price depends on location.

A big house built in a low-rent district is not going to retain the same value as a big house built in an expensive area. For the King County data, we can fit an interaction between SqFtTotLiving and ZipGroup.

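The resulting model has four new terms: SqFtTotLiving:ZipGroup2, SqFtTotLiving:ZipGroup3, and so on. Location and house size appear to have a strong interaction. Because ZipGroup 1 is the reference level, the slope for SqFtTotLiving in each of the other groups is the main-effect coefficient plus that group's interaction coefficient. As a result, adding a square foot in the most expensive zip code group boosts the predicted sale price by a factor of almost 2.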
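How the interaction terms change the slope can be sketched in Python. The column layout follows the term names above:

- an intercept and SqFtTotLiving;
- four ZipGroup dummies, with group 1 as the reference;
- four SqFtTotLiving:ZipGroup interactions.

The coefficient values are hypothetical placeholders, not estimates from the King County data.

```python
def design_row(sqft, zip_group, n_groups=5):
    """Columns: intercept, SqFtTotLiving, ZipGroup2..ZipGroup5 dummies
    (group 1 is the reference), then SqFtTotLiving:ZipGroup2..5 interactions."""
    dummies = [1 if zip_group == g else 0 for g in range(2, n_groups + 1)]
    interactions = [sqft * d for d in dummies]
    return [1, sqft] + dummies + interactions

def predict(coefs, sqft, zip_group):
    return sum(b * x for b, x in zip(coefs, design_row(sqft, zip_group)))

def sqft_slope(coefs, zip_group, n_groups=5):
    """Price change per extra square foot: the main effect, plus the matching
    interaction coefficient for groups 2..n_groups."""
    if zip_group == 1:
        return coefs[1]
    return coefs[1] + coefs[1 + (n_groups - 1) + (zip_group - 1)]

# Hypothetical coefficients laid out like design_row:
# [b0, b_sqft, d2, d3, d4, d5, i2, i3, i4, i5]
coefs = [0, 100, 5000, 10000, 20000, 40000, 10, 30, 60, 100]
for g in range(1, 6):
    print(g, sqft_slope(coefs, g), predict(coefs, 2001, g) - predict(coefs, 2000, g))
```

The last two columns of output agree, because the price difference for one extra square foot is exactly the group-specific slope.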

In problems involving many variables, it can be challenging to decide which interaction terms should be included in the model. Several different approaches are commonly taken:

- In some problems, prior knowledge and intuition can guide the choice of which interaction terms to include in the model.
- Penalized regression can automatically fit a model to a large set of possible interaction terms.
- Perhaps the most common approach is to use tree models, as well as their descendants, random forest and gradient boosted trees.

In explanatory modeling (i.e., in a research context), various steps are taken to assess how well the model fits the data. Most are based on analysis of the residuals, which can test the assumptions underlying the model. These steps do not directly address predictive accuracy, but they can provide useful insight in a predictive setting.

Generally speaking, an extreme value, also called an outlier, is one that is distant from most of the other observations.

In regression, an outlier is a record whose actual y value is distant from the predicted value. You can detect outliers by examining the standardized residual, which is the residual divided by the standard error of the residuals.

There is no statistical theory that separates outliers from nonoutliers. Rather, there are arbitrary rules of thumb for how distant from the bulk of the data an observation needs to be in order to be called an outlier. We extract the standardized residuals using the rstandard function and obtain the index of the smallest residual using the order function.
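The sketch below follows the definition given above on a hypothetical five-point data set. It is a minimal Python example that fits a line, divides each residual by the residual standard error sqrt(SSE / (n - 2)), and takes the index of the most negative value (the role order plays in R). R's rstandard goes one step further and also divides each residual by sqrt(1 - h_i), where h_i is the observation's leverage.

```python
from math import sqrt

def fit_line(x, y):
    """Least squares line: slope = Sxy / Sxx, intercept = ybar - slope * xbar."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return ybar - slope * xbar, slope

def standardized_residuals(x, y):
    """Residuals divided by the residual standard error sqrt(SSE / (n - 2))."""
    b0, b1 = fit_line(x, y)
    resid = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    rse = sqrt(sum(r * r for r in resid) / (len(y) - 2))
    return [r / rse for r in resid]

x = [1, 2, 3, 4, 5]
y = [2, 4, 6, -2, 10]  # hypothetical; the fourth point sits well below the line
std = standardized_residuals(x, y)
most_negative = min(range(len(std)), key=lambda i: std[i])
print(most_negative, round(std[most_negative], 3))
```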

The original data record corresponding to this outlier can then be examined. The accompanying figure shows an excerpt from the statutory deed for this sale: it is clear that the sale involved only a partial interest in the property.

In this case, the outlier corresponds to a sale that is anomalous and should not be included in the regression. For big data problems, outliers are generally not a problem in fitting the regression to be used in predicting new data. However, outliers are central to anomaly detection, where finding outliers is the whole point.

The outlier could also correspond to a case of fraud or an accidental action. In any case, detecting outliers can be a critical business need. A value whose absence would significantly change the regression equation is termed an influential observation.

In regression, such a value need not be associated with a large residual. As an example, consider the regression lines in the accompanying figure. The solid line corresponds to the regression with all the data, while the dashed line corresponds to the regression with the point in the upper-right corner removed.

Clearly, that data value has a huge influence on the regression even though it does not produce a large residual in the full regression. This data value is considered to have high leverage on the regression. The accompanying figure shows the influence plot for the King County house data, which can be created in R.

There are apparently several data points that exhibit large influence in the regression. Cook's distance can be computed using the cooks.distance function, and you can use hatvalues to compute the leverage diagnostics. The accompanying table compares the regression with the full data set and with highly influential data points removed.
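For a simple regression, leverage and Cook's distance can be computed by hand. This Python sketch assumes hypothetical data with one point far to the upper right, echoing the figure described above, and uses the standard formulas with p = 2 parameters:

- h_i = 1/n + (x_i - xbar)^2 / Sxx
- D_i = e_i^2 / (p * s^2) * h_i / (1 - h_i)^2

R's hatvalues and cooks.distance compute the same quantities for any lm fit.

```python
def fit_line(x, y):
    """Least squares line: slope = Sxy / Sxx, intercept = ybar - slope * xbar."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return ybar - slope * xbar, slope

def influence(x, y):
    """Residuals, hat values (leverage), and Cook's distances for a simple
    regression with p = 2 parameters (intercept and slope)."""
    n, p = len(x), 2
    b0, b1 = fit_line(x, y)
    xbar = sum(x) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    resid = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    s2 = sum(r * r for r in resid) / (n - p)  # residual variance estimate
    hat = [1 / n + (xi - xbar) ** 2 / sxx for xi in x]
    cooks = [r * r / (p * s2) * h / (1 - h) ** 2 for r, h in zip(resid, hat)]
    return resid, hat, cooks

# Hypothetical data: five clustered points plus one far to the upper right.
x = [1, 2, 3, 4, 5, 20]
y = [1, 2, 1.5, 2.5, 2, 15]
resid, hat, cooks = influence(x, y)
for i in range(len(x)):
    print(f"x={x[i]:>2}  residual={resid[i]:6.2f}  hat={hat[i]:.3f}  cooks={cooks[i]:.2f}")
```

Here the last point has the largest hat value and the largest Cook's distance, even though its residual is not the largest. This is the high-leverage situation described in the text.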

The regression coefficient for Bathrooms changes quite dramatically. For purposes of fitting a regression that reliably predicts future data, identifying influential observations is only useful in smaller data sets.

For regressions involving many records, it is unlikely that any one observation will carry sufficient weight to cause extreme influence on the fitted equation, although the regression may still have big outliers.

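For purposes of anomaly detection, though, identifying influential observations can be very useful.

Statisticians pay considerable attention to the distribution of the residuals. It turns out that ordinary least squares estimates are unbiased under a wide range of distributional assumptions for the errors.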

This means that in most problems, data scientists do not need to be too concerned with the distribution of the residuals. The distribution of the residuals is relevant mainly for the validity of formal statistical inference (hypothesis tests and p-values), which is of minimal importance to data scientists concerned mainly with predictive accuracy.

For formal inference to be fully valid, the residuals are assumed to be normally distributed, have the same variance, and be independent. Heteroskedasticity is the lack of constant residual variance across the range of the predicted values.

In other words, errors are greater for some portions of the range than for others. The ggplot2 package has some convenient tools to analyze residuals.

The accompanying figure shows the resulting plot. The function calls the loess method to produce a visual smooth that estimates the relationship between the variables on the x-axis and y-axis in a scatterplot (see Scatterplot Smoothers).

Evidently, the variance of the residuals tends to increase for higher-valued homes, but is also large for lower-valued homes. Heteroskedasticity indicates that prediction errors differ for different ranges of the predicted value, and may suggest an incomplete model.
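The loess smooth is the usual visual tool. As a cruder numerical stand-in (a swapped-in technique, not the one used in the text), this Python sketch works in three steps:

1. Sort the observations by fitted value.
2. Cut them into consecutive bins.
3. Compare the mean absolute residual in each bin.

The fitted values and residuals are hypothetical, and the sketch assumes at least as many observations as bins. A steady rise across bins is the numerical signature of the heteroskedasticity described above.

```python
def binned_abs_residuals(fitted, resid, n_bins=3):
    """Sort by fitted value, cut into n_bins consecutive chunks of (nearly) equal
    size, and return the mean absolute residual in each chunk.
    Assumes len(fitted) >= n_bins so that no chunk is empty."""
    pairs = sorted(zip(fitted, resid))
    size = len(pairs) / n_bins
    means = []
    for b in range(n_bins):
        chunk = pairs[round(b * size):round((b + 1) * size)]
        means.append(sum(abs(r) for _, r in chunk) / len(chunk))
    return means

fitted = [200, 250, 300, 350, 400, 450, 500, 550, 600]  # hypothetical, $1,000s
resid = [5, -4, 6, -15, 12, -18, 40, -35, 45]
print([round(m, 1) for m in binned_abs_residuals(fitted, resid)])
```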

The distribution of the residuals has decidedly longer tails than the normal distribution and exhibits mild skewness toward larger residuals. Statisticians may also check the assumption that the errors are independent. This is particularly true for data that are collected over time. The Durbin-Watson statistic can be used to detect whether there is significant autocorrelation in a regression involving time series data.
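The Durbin-Watson statistic is simple to compute from residuals listed in time order. Here is a minimal Python sketch; libraries such as statsmodels also provide it, as statsmodels.stats.stattools.durbin_watson. The residual sequences in the usage lines are toy values chosen to show the two extremes.

```python
def durbin_watson(resid):
    """DW = sum over t of (e_t - e_{t-1})^2, divided by the sum of e_t^2.
    Values near 2 suggest no first-order autocorrelation, values toward 0
    suggest positive autocorrelation, and values toward 4 negative autocorrelation."""
    num = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, len(resid)))
    den = sum(e * e for e in resid)
    return num / den

print(durbin_watson([1, 1, 1, 1]))    # same sign throughout: positive autocorrelation
print(durbin_watson([1, -1, 1, -1]))  # alternating signs: negative autocorrelation
```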

Even though a regression may violate one of the distributional assumptions, should we care? Most often in data science, the interest is primarily in predictive accuracy, so some review of heteroskedasticity may be in order. You may discover that there is some signal in the data that your model has not captured.

P-values help with interpretation here: if the p-value is smaller than some threshold (often 0.05), there is evidence of a statistically significant relationship. Finally, the equation is given at the end of the results section. Plug in any value of X within the range of the dataset to calculate the corresponding prediction for its Y value.

The Linear Regression calculator provides a generic graph of your data and the regression line. While the graph on this page is not customizable, Prism is a fully-featured research tool used for publication-quality data visualizations.

Graphing is important not just for visualization reasons, but also to check for outliers in your data. If there are a couple of points far away from all the others, there are a few possible meanings: they could be unduly influencing your regression equation, or the outliers could be a very important finding in themselves.

Use this outlier checklist to help figure out which is more likely in your case.

For additional features like advanced analysis and customizable graphics, we offer a free trial of Prism. Some additional highlights of Prism include the ability to:

- Use the line-of-best-fit equation for prediction directly within the software
- Graph confidence intervals and use advanced prediction intervals
- Compare regression curves for different datasets
- Build multiple regression models (use more than one predictor variable)

Looking to learn more about linear regression analysis? Our ultimate guide to linear regression includes examples, links, and intuitive explanations on the subject. Prism's curve fitting guide also includes thorough linear regression resources in a helpful FAQ format. Both of these resources also go over multiple linear regression analysis, a similar method used for more variables.

If more than one predictor is involved in estimating a response, you should try multiple linear regression analysis in Prism (not the calculator on this page)!


If you're thinking simple linear regression may be appropriate for your project, first make sure it meets the assumptions of linear regression listed below.

Have a look at our analysis checklist for more information on each:

- Linear relationship
- Normally-distributed scatter
- Homoscedasticity
- No uncertainty in predictors
- Independent observations
- Variables (not components) are used for estimation

While it is possible to calculate linear regression by hand, it involves a lot of sums and squares, not to mention sums of squares!
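The by-hand calculation can be sketched in Python using only sums and sums of squares. The helper names and data are hypothetical. The predict helper refuses values of X outside the observed range, following the earlier advice to plug in X only within the range of the dataset.

```python
def linear_regression_by_hand(x, y):
    """Slope and intercept from sums and sums of squares:
    slope = Sxy / Sxx, intercept = ybar - slope * xbar."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sxx = sum(xi * xi for xi in x) - sum_x ** 2 / n  # sum of squares of x about its mean
    sxy = sum(xi * yi for xi, yi in zip(x, y)) - sum_x * sum_y / n  # cross-products about the means
    slope = sxy / sxx
    intercept = sum_y / n - slope * sum_x / n
    return intercept, slope

def predict(intercept, slope, x_new, x_observed):
    """Plug X into the fitted equation, refusing to extrapolate beyond the data."""
    if not min(x_observed) <= x_new <= max(x_observed):
        raise ValueError("x_new is outside the range of the data")
    return intercept + slope * x_new

x = [1, 2, 3, 4]
y = [3, 5, 7, 9]
b0, b1 = linear_regression_by_hand(x, y)
print(b0, b1, predict(b0, b1, 2.5, x))
```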
